The Quad: Facial recognition faces myriad issues before it can be deployed successfully

(Zoë Vikstrom/Daily Bruin staff)

By Amanda Houtz

Feb. 28, 2020 12:52 a.m.

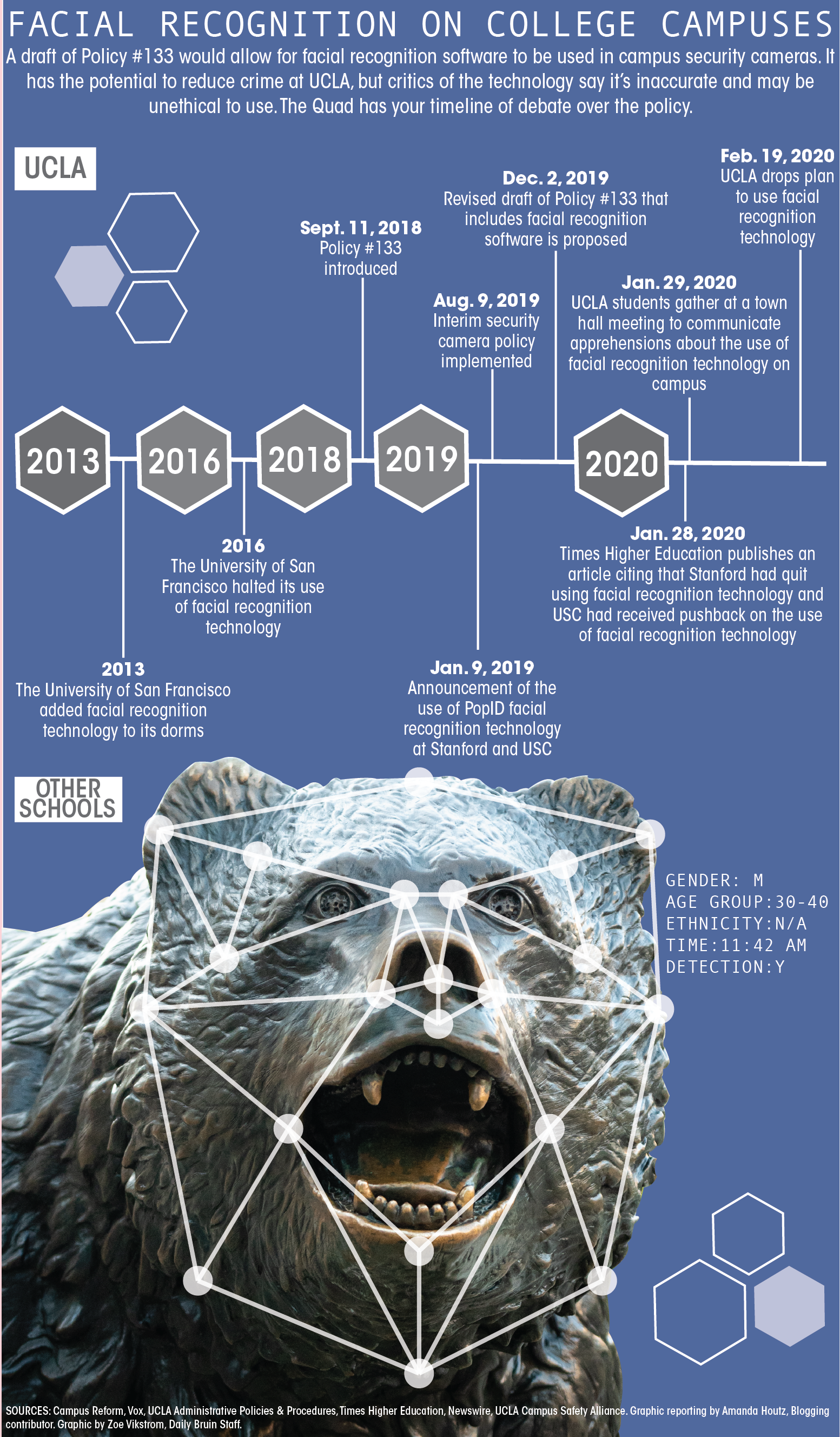

The debate over the use of facial recognition software on college campuses has reached UCLA, and the arguments over the technology’s pros and cons have come to a head.

UCLA joined this debate last year with the introduction of a revised version of Policy 133 that called for the integration of facial recognition technology into campus security cameras. The conversation between proponents and opponents of the technology’s implementation at UCLA has paralleled the broader conversation on the subject around the nation.

The technology in question – facial recognition software – identifies individuals by comparing precise facial measurements gathered from camera images to photos of individuals stored in a database. The measurements, which include details such as a person’s jawline length, are used to create a template that is then compared to preexisting photos such as mugshots and social media posts, according to the American Bar Association.
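The matching step described above can be pictured with a minimal sketch. It assumes a template is just a short list of facial measurements and that two faces "match" when their templates are close enough. The measurement names, the numbers, the threshold and the function names are all hypothetical, and real systems rely on far more elaborate templates than this.

```python
import math


def distance(template_a, template_b):
    """Euclidean distance between two equal-length measurement templates."""
    if len(template_a) != len(template_b):
        raise ValueError("templates must have the same number of measurements")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(template_a, template_b)))


def best_match(probe, database, threshold):
    """Return the name of the closest stored template, or None when no
    stored template lies within `threshold` of the probe."""
    best_name, best_dist = None, float("inf")
    for name, template in database.items():
        d = distance(probe, template)
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist <= threshold else None


# Hypothetical templates: (jawline length, eye spacing, nose width) in made-up units.
database = {
    "person_a": (12.1, 6.3, 3.4),
    "person_b": (10.8, 5.9, 3.9),
}

# Template built from a new camera image (also made up).
probe = (12.0, 6.4, 3.4)

print(best_match(probe, database, threshold=0.5))
```

The threshold is the key design choice in a scheme like this: a looser threshold flags more people, including more wrong ones, while a stricter one misses more true matches.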

UCLA’s proposal to integrate this technology into its security system was considered in the wake of several alarming instances of criminal activity on campus this academic year. Among these events were burglaries, sexual batteries and an alleged armed assault.

Nonetheless, UCLA ultimately rejected its own plan Feb. 19 following a January town hall meeting in which participants raised many concerns about the technology’s implementation.

[Related: UCLA decides not to implement facial recognition technology after student backlash]

Facial recognition technology has become controversial because its potential benefits come with significant risks.

One of the main arguments for using this technology is its ability to deter crime. Facial recognition technology can be used to catch perpetrators of past crimes or to detect the presence of wanted criminals or terrorists. The latter function has the potential to stop crime in its tracks – a capability that both ordinary cameras and the untrained eye lack.

There are also more niche uses of this technology, which include identifying habitual gamblers, welcoming hotel guests, taking school attendance and uncovering underage drinkers, according to the ABA.

However, as with most surveillance technology, the benefits come with drawbacks. One issue that has arisen with facial recognition technology is misidentification.

In an August 2019 test conducted by the American Civil Liberties Union, Amazon’s facial recognition software Rekognition incorrectly identified 26 out of 120 screened lawmakers as suspected criminals.
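To put those figures in proportion, the short calculation below works out the share of screened lawmakers who were wrongly flagged. The function name is illustrative, and the only inputs are the two numbers reported from the ACLU test.

```python
def share_wrongly_flagged(wrongly_flagged, total_screened):
    """Fraction of screened people who were incorrectly flagged."""
    if total_screened <= 0:
        raise ValueError("total_screened must be positive")
    return wrongly_flagged / total_screened


# Figures reported from the ACLU test: 26 of 120 lawmakers.
rate = share_wrongly_flagged(26, 120)
print(f"{rate:.1%}")  # prints 21.7%
```

That is a little more than one in five of the lawmakers screened.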

Perhaps more alarming than the technology’s general propensity for error, however, is the bias in whom it misidentifies.

A National Institute of Standards and Technology study of various facial recognition algorithms found patterns of discrimination in some face-matching algorithms. In certain algorithms, Asian and African American faces were misidentified 10 to 100 times more often than Caucasian faces in one-to-one matching, and African American females had higher rates of false positives in one-to-many matching.

A study at the University of Colorado Boulder expanded on these findings, detailing how facial analysis technology handled images of nonbinary, transgender and cisgender people unequally and could not correctly identify nonbinary people.

These documented biases are all the more dangerous given the technology’s use in fields as consequential as the criminal justice system. According to the ABA, data gathered by these systems might soon be used as evidence in court – making concerns about the technology’s accuracy even more pressing.

The problems with the technology’s use in practice are compounded by broader concerns about its constitutionality. Critics have argued that the technology violates the First and Fourth Amendments because it tracks individuals’ movements and implicitly encourages self-censorship.

In light of these concerns, activist groups have mobilized to stop the spread of facial recognition technology.

Fight For The Future is an organization whose mission is to make technology a force for empowerment rather than oppression. The organization succeeded in preventing the implementation of facial recognition technology at major concerts and festivals like Coachella. Now, Fight For The Future has partnered with Students for Sensible Drug Policy to keep the technology off college campuses.

The movement away from facial recognition technology on college campuses is illustrated by its removal at schools such as the University of San Francisco.

USF introduced this technology to separate students from nonstudents entering dorms by using the student ID photos in its system. The measure seemed like a good idea on a city-centered campus where students and nonstudents mix freely. However, the university said it stopped using the technology in 2016 because it did not meet the university’s requirements.

Beyond universities, New York’s Lockport City School District recently implemented facial recognition technology at its eight schools in an attempt to improve safety. Specifically, the system promises to track the entry of people who are not supposed to be on campus, alert security personnel to their presence and screen for guns.

However, the same concerns raised at the university level – such as invasion of student privacy and racial bias in misidentification – are echoed in this community. This shows both how far the controversy reaches and how broadly the underlying concerns apply.

Though UCLA’s effort to implement this technology was thwarted after students voiced their concerns, schools do not have to receive student approval to implement these kinds of security measures. Concerns over privacy could therefore intensify if schools adopt the technology without student consent.

Wide-scale implementation of this technology thus seems unlikely – at least until the system’s errors are addressed and a broader consensus is reached on its use.