Q&A: Professor expounds on research involving machine consciousness

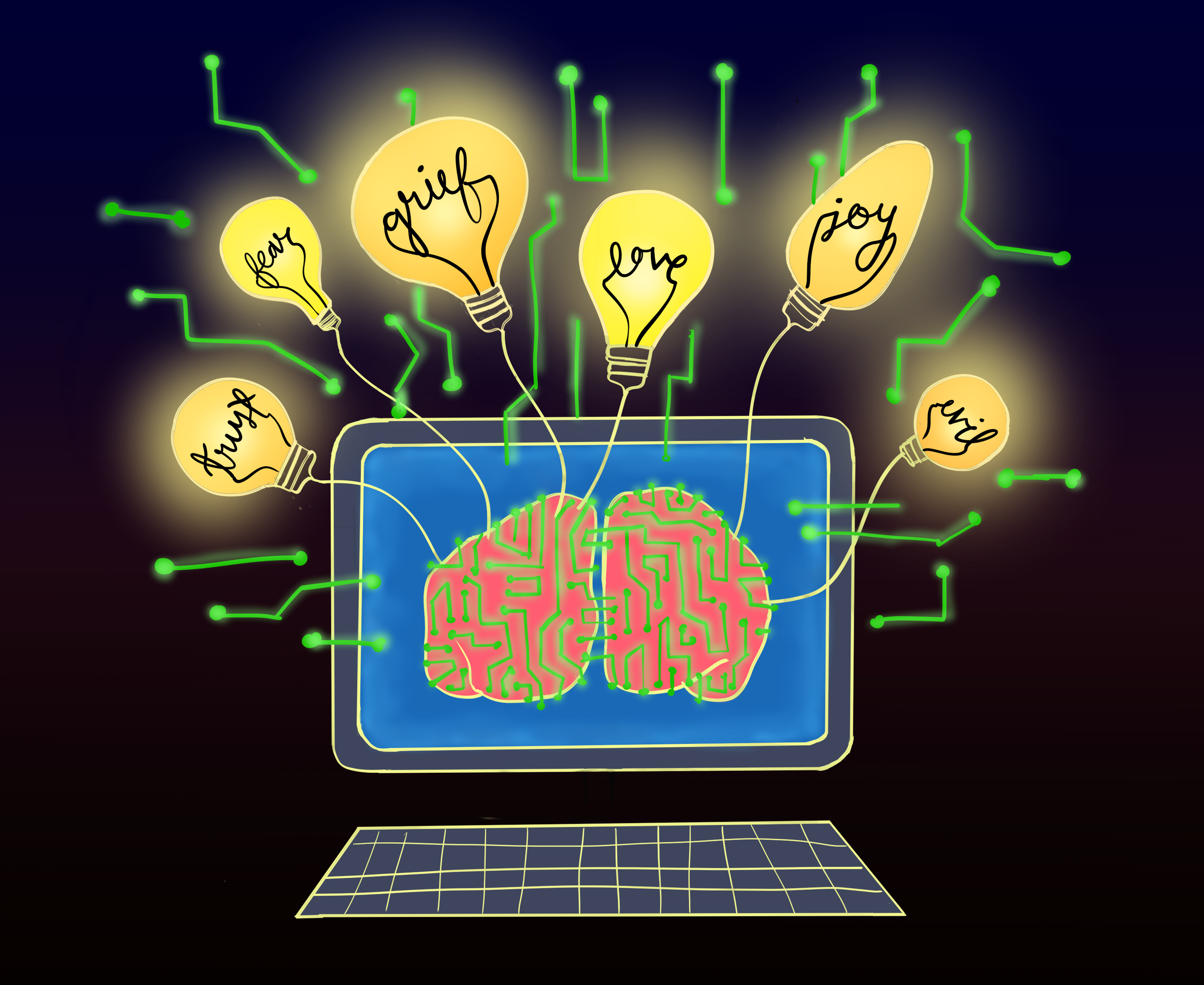

(Rachel Bai/Daily Bruin)

By Emi Nakahara

Nov. 14, 2017 1:54 a.m.

Hakwan Lau, a psychology professor at UCLA, co-authored an article published in the journal Science last month that looked into whether machines could ever achieve consciousness. The Daily Bruin’s Emi Nakahara spoke to Lau about his research on consciousness and artificial intelligence, and whether a computer could ever be as conscious as the human brain.

Daily Bruin: What is your general research interest?

Hakwan Lau: I have an undergraduate background in philosophy and a Ph.D. in neuroscience. I study consciousness; I study how the brain supports some processes that are conscious and some processes that are unconscious and the difference between those two processes.

DB: Why did you decide to pursue this research idea on consciousness?

HL: Artificial intelligence has become very popular and exciting again, and consciousness has been an age-old problem. I didn’t invent this problem myself; you can trace it back to philosophers such as Descartes. I was a philosophy student and defining consciousness was the most challenging philosophy problem of all time, so I was interested in it.

DB: What are your most significant findings from your study?

HL: So this was a co-authored paper, and it wasn’t exactly an original research paper per se; it was more like reviewing the state of the field (on consciousness and AI research). I think a problem is that a lot of people in AI either explicitly or implicitly have some beliefs about whether machines can be conscious or not. Some say yes, AI is making so much progress that eventually it can simulate the whole brain and any kind of psychological function can be built into machines. Other people think no, AI is very limited and it can only do certain kinds of specific things but it can never truly be human. Usually when we ask why, that’s when it gets a bit messy.

We as brain scientists thought we could make ourselves useful here by reviewing what we already know about the human brain and what processes are conscious and what processes are not. So why don’t we look at them, and try to translate them into more mechanistic terms and think about what kind of computer mechanism would mimic the human brain’s conscious and unconscious mechanisms? Then we can start to think about how we make a machine that is conscious.

DB: How do you define consciousness apart from AI?

HL: AI is a whole field of trying to build machines that can do smart things. Consciousness is just one aspect of the mind, so it’s not the same. In the paper, we define consciousness the way the scientific literature we reviewed describes it, which is quite different from what you would normally think about. Usually we think about consciousness as in you are awake, and when you’re passed out in dreamless sleep, you’re unconscious. This common definition is relatively simple.

What’s more interesting is how consciousness is defined by the sense of having a qualitative (instead of quantitative) feel from sensory perception. When you see a color like red, it’s more than just registering some wavelength numbers; you’re actually enjoying a certain visual experience of the redness looking a certain way. Computers don’t have this kind of processing.

DB: What was your conclusion on whether machines could ever be conscious?

HL: We didn’t exactly say computers can definitively be conscious, but I think it’s at least worth a try to create conscious computers, and our data does not reject the notion that they can be conscious.

Currently, a successful algorithm in AI that got everyone excited is called deep learning. Deep learning is basically a new version of neural network pattern recognition. So, machines can recognize patterns such as faces and handwriting. This type of learning is mostly done unconsciously in the human brain, so that would mean the most current algorithms are not likely to be conscious like human brains.

However, we also speculate that we know what types of computations are supported by conscious human processing, and those computations can be done in machines as well. Near the end of the article we reviewed these possibilities.

We didn’t make a very strong statement; we just said: “Look, if anything, it looks like it can be done but it hasn’t been fully done yet and maybe we should give it a try.”