UCLA researchers discover new limits of machine learning

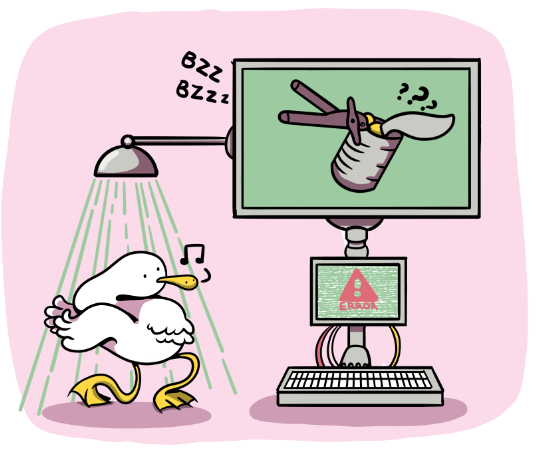

(Michelle Fu/Daily Bruin)

By Zhichun Li

Jan. 24, 2019 11:30 p.m.

UCLA researchers found the limits of deep learning networks – distinguishing between an otter and a can opener.

Nicholas Baker, a cognitive psychology graduate student, explored the behavior of two convolutional neural networks, or CNNs, a well-established type of machine learning network capable of visual recognition. The study aimed to test whether the networks process what they see automatically in the same way humans do.

“Recognizing by shape is a very abstract task,” Baker said. “There is a lot of symbolic processing between what you initially see in sensation and what you encode in a more durable format, and we think this might be a very hard thing to do for neural networks.”

When humans look at an object, the brain separates it from its background using the outline of the shape and encodes the shape into a representation it can understand. On the other hand, deep learning networks take images and classify them into categories such as “polar bear” and “can opener,” according to how likely they are to fall into those categories, Baker said.
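The classification step described above can be illustrated with a small sketch. It assumes the network's final layer outputs one raw score per category and that those scores are converted to likelihoods with a softmax function, a common choice in image classifiers. The scores and the three category names below are hypothetical, not values from the study.

```python
import math

def softmax(scores):
    """Convert raw per-category scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the largest score for numerical stability
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# Hypothetical raw scores a network might assign to a single image.
scores = {"polar bear": 4.0, "can opener": 1.0, "otter": 2.5}

probs = softmax(scores)
best = max(probs, key=probs.get)  # the category the network would report
```

Here `best` is the category with the largest probability. The network reports a likelihood for every category at once rather than first isolating a shape and then interpreting it.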

For humans, the process of recognizing objects consists of multiple distinct tasks. The deep learning network, however, recognizes objects only by associating their images with a corresponding category.

In addition, the CNNs are not explicitly coded to perceive broader, generalized features as humans do, so they fail to recognize objects that are very easily identified by humans.

Image textures can often override the shape of the object and confuse the networks. When a network was presented with a teapot that had the texture of a golf ball, it predicted there was a 38.75 percent chance the object was a golf ball and a 0.41 percent chance it was a teapot. Similar misclassifications occurred with other test objects, such as a gong-textured vase.

The networks focus heavily on local details of objects instead of their overall shape and frequently fail to recognize “vague” representations of objects that would be obvious to human observers.

“You wouldn’t record all individual wisps of the cloud,” Baker said. “You would encode the ‘global shapes’ of the cloud, the big features.”

A simplified bear silhouette, in which the outline of the bear is reduced to piecewise straight lines and curves, would confuse the networks. So would a roughly sketched piano and other simple silhouettes of objects that humans typically identify without difficulty.

The CNNs are also confused by silhouettes of objects whose contours have been replaced with zigzagged lines.

Experiments show, however, that the deep learning networks’ focus on local features can help them correctly classify scrambled images. When parts of an object are randomly moved around, the networks can often still provide correct predictions. For example, when an image of a camel is changed to show one leg attached horizontally to its back and its tail floating in front of its head, the network can still correctly identify the object as a camel.
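A toy sketch can show why a recognizer that relies on local features is unaffected by scrambling. It is not the study's method and a real CNN is more complex. It assumes a hypothetical recognizer that only counts which small patches appear in an image and ignores where they sit. The 4-by-4 grid of pixel values and the helper names are invented for illustration.

```python
import random
from collections import Counter

def split_into_patches(image, size):
    """Cut a 2D grid (a list of rows) into size-by-size patches, row by row."""
    patches = []
    for top in range(0, len(image), size):
        for left in range(0, len(image[0]), size):
            patch = tuple(
                tuple(image[top + r][left:left + size]) for r in range(size)
            )
            patches.append(patch)
    return patches

def local_feature_counts(patches):
    """Count how often each patch occurs, ignoring its position."""
    return Counter(patches)

# Hypothetical 4x4 "image" of pixel values.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 1, 0],
    [2, 3, 4, 5],
]

patches = split_into_patches(image, 2)

# Scramble: move the patches to random positions (fixed seed for repeatability).
scrambled = patches[:]
random.Random(0).shuffle(scrambled)

# A position-blind summary of local features is identical before and after.
same_features = local_feature_counts(patches) == local_feature_counts(scrambled)
```

Because the summary ignores position, `same_features` is true however the patches are rearranged. A human observer relying on the overall outline would see a very different object.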

Hongjing Lu, a professor of psychology and author of the paper, said the deep learning network is not as reliable as human visual recognition.

“What is missing there, is this robust system of recognition,” Lu said.

A small change in a local feature of an object, one that a human observer could easily ignore, can completely change the deep learning networks’ prediction. Lu said the networks can be used for constrained and specific tasks but currently should not be relied on alone for more complicated tasks such as decision-making in self-driving cars.

The results of this study suggest that breaking the image recognition process into multiple tasks could improve the deep learning algorithms, Lu said.

Jonathan Kao, an assistant professor of electrical and computer engineering who studies deep learning, said it is crucial to know how the networks can be tricked.

“It is important to know the vulnerabilities of neural networks so that the networks can be improved,” he said.