The Quad: College ranking systems, categorized and (somewhat) demystified

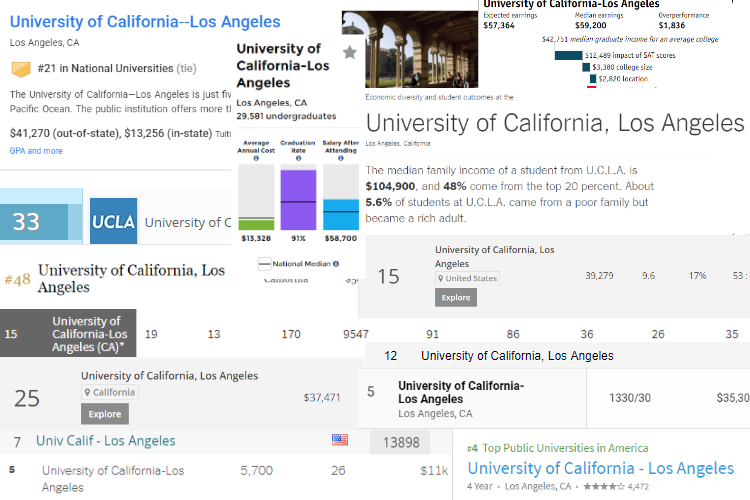

More than 15 publications and organizations attempt to evaluate colleges and universities in the United States and around the world. UCLA is ranked anywhere between first and 48th. (Arthur Wang/Daily Bruin senior staff)

By Arthur Wang

Nov. 2, 2017 5:27 p.m.

Fall is here, which means it’s time for a deluge of college rankings to drop like the leaves never do in Southern California.

The most recent update to our oversaturated college rankings landscape is the 2018 iteration of U.S. News and World Report’s “Best Global Universities” ranking, released Oct. 24. It’s not to be confused with its far more notable “Best National Universities” ranking of undergraduate-serving institutions in the U.S. Horrifyingly, these are only two of the many, many different lists published by the magazine, whose continued existence appears to be heavily dependent on churning out and updating lists.

U.S. News, of course, is not the only player in this game, although it is easily the best known, having created the first modern college ranking in 1983. In the current cash-poor digital age of journalism, publications large and small continue to jump into the rankings fray to rake in the all-but-guaranteed web traffic that rankings generate. The Economist and The Wall Street Journal, which are better known for their coverage of business than of higher education, are two examples of unconventional college tabulators.

With more than 15 different college rankings published by magazines and organizations of some repute, we may have reached peak college rankings. More concerning than the sheer quantity is that the quality, methodology and philosophy of each ranking differ, and those differences are not always obvious to readers.

Evaluating the evaluators

Higher education rankings are deeply, if not fatally, flawed assessments of colleges and universities, even if they are seen as authoritative. Sociologists, particularly those who study organizational behavior, have been at the forefront of explaining how rankings shape the perception of schools and how administrators react to the numbers. For example, they find that rankings reinforce long-standing hierarchies in higher education. The media and the college-going public grant rankings vast legitimacy, while conflicted administrators disdain their methodological criteria yet flaunt any positive showing. Administrators feel forced to respond by enacting changes to university policy that are tailored to rankings criteria. This is known as “gaming” the rankings, and it can happen regardless of the benefit to students and despite the fact that single-digit differences between ranks are trivial.

Not surprisingly, academics are not the only critics of college rankings. A 2014 Boston Magazine article on Northeastern University’s completely shameless quest to climb the U.S. News rankings by spending billions on new buildings and schmoozing with top administrators has become one of the more notable pieces of anti-rankings literature. In a more direct critique of, again, the U.S. News ranking, Politico found that universities that did more to improve accessibility and socioeconomic diversity were actually penalized. Politico’s analysis appeared after The New York Times published a “College Access Index” – a ranking in itself – showing how much, or how little, top American colleges were doing to reduce social inequality.

To assert that rankings are pointless, though, does nothing to diminish their enormous popularity or their keenly felt influence on wary administrators. What follows is a breakdown of the predominant types of popular college rankings – a typology of rankings, so to speak. This is not a ranking of rankings, but an effort to show the different approaches and perspectives used to evaluate some or all aspects of universities.

“Holistic” rankings

Examples: U.S. News Best National Universities, The Princeton Review Best Colleges, Niche, WSJ/Times Higher Education US College Rankings, Washington Monthly

These generalist rankings evaluate schools on a variety of criteria in an attempt to produce a general ranking of “best” schools. Using an expansive rubric that evaluates disparate facets of a university is a bid for legitimacy and authority – on the surface, a ranking that measures more would seem to yield a more comprehensive, holistic assessment of a university’s overall quality.

Purported authority aside, these rankings are very popular with prospective undergraduates and their parents because they consider elements such as student satisfaction, student-faculty ratio, “quality of education” and other criteria thought to be important indicators of the quality of the undergraduate experience – which is by no means just an academic one. A school’s selectivity is often used as a proxy for its quality, even though other attributes, such as the quality of instructors or average class sizes, can be equally or even more valuable.

In its first release in 1983, U.S. News published a purely reputation-based ranking. Reputation is usually measured by sending surveys to college administrators (and sometimes to counselors at prestigious private high schools) and asking them to rate colleges on some sort of scale. Reputation is no longer the only factor, as a wealth of rather useful information now supplements it, but holistic rankings still weigh reputation heavily, even where it is not explicitly part of the methodology (rankings built on student surveys, for instance, can easily be swayed by how prestigious students believe their own school is). As such, rankings reinforce existing status hierarchies in higher education. A prestigious school does not need to do very much to maintain its reputation, while a lesser-known school needs to get very lucky or spend a fortune on marketing to rise even modestly. Or it could do what Northeastern did.

The chosen factors and the weight assigned to each criterion can change arbitrarily, for holistic rankings in particular. Because methodologies are not consistent from year to year, there is no way to scientifically assess changes in rank, or even in institutional quality, over time by comparing rankings. This resembles the unresolvable debates over which player or team season is the “greatest of all time” in a professional sports league, where the organizers constantly change the rules to keep the competition interesting. U.S. News has essentially done the same with its ranking, although the reputation component ensures that the very best schools remain in their spots in seeming perpetuity.

Global reputation rankings

Examples: QS World University Rankings, Times Higher Education World University Rankings

Global reputation rankings are the internationally oriented counterparts of the U.S. News ranking. They are one of two archetypes of global rankings; the other weighs research more heavily than reputation. In both cases, schools from the West usually best those from the Rest, because these rankings essentially reflect the structural and historical advantages that afford more prestige to universities in the English-speaking world and Western Europe. A prestige hierarchy is reproduced cyclically when, for example, top scholars are attracted to – or wooed by – schools already acknowledged to be prestigious. The modern university’s descent from its medieval European forebears also indirectly benefits the reputation of European and Anglo institutions.

Research-oriented rankings

Examples: Academic Ranking of World Universities, CWTS Leiden Ranking

Quite a few college evaluators seek to avoid the tricky, Anglocentric element of reputation by making the research conducted at universities the predominant rankings criterion. Such lists consider fewer criteria than the holistic tabulations. Yet in these rankings, too, the same old names rise to, and remain at, the top year after year.

As it turns out, the schools that are prestigious are also the ones with the most resources and pull to attract the greatest number of top researchers. Schools in wealthy, English-speaking countries sit on top, thanks to governments able to fund large research projects and the institutions’ ability to produce scholarship in English, academia’s lingua franca.

The Shanghai-based Academic Ranking of World Universities, which claims to be the first global university ranking, has never ranked Harvard anywhere but first. In fact, the competition is not even close. While Harvard always receives the benchmark-setting score of 100, the second-best school has never come closer than a score of around 80. Taken at face value, the ARWU ranking would lead one to believe that every other school is at least 20 percent inferior to Harvard.

ROI rankings

Examples: Forbes, TIME/Money, College Scorecard

Since the cost of attending a four-year university has increased dramatically in recent decades, some rankings have decided to capitalize on these financial concerns by emphasizing the costs incurred and the economic value gained by earning a degree from a particular school, rather than the quality of education. If holistic rankings approach undergraduate education as an experience, these rankings treat college as an investment that should yield satisfactory monetary returns.

This application of neoliberal economic logic to higher education has become popular for many reasons. Key factors include the massification of higher education, steep tuition increases at both public and private schools, and the dramatic expansion of campus services and amenities, which together have given rise to a consumer mindset. The Obama administration lent the return-on-investment view of college a lot of legitimacy by letting users of its College Scorecard tool sort schools by post-graduation earnings and by how graduates’ earnings compare with those of high school graduates.

Single-issue rankings

Examples: NYT College Access Index, The Economist, Equality of Opportunity Project

Rankings that want to make a statement about the state of higher education are more likely to be single-issue. This does not mean that only one criterion is evaluated, but that all the factors serve to tell the same story. Such lists may not even be considered college rankings in the conventional sense, because they do not attempt to “holistically” evaluate schools, but any sortable list can be considered a ranking.

Two lists published by The New York Times, both accompanied by commentary about how colleges impede or encourage access and socioeconomic mobility, are notable examples. The Economist published a true single-issue ranking in 2015, measuring only the gap between each school’s expected and actual graduate earnings. Given that the ranking was partly calculated on the assumption that political progressives and marijuana enthusiasts are less likely to pursue high-paying jobs, the entire list reads as borderline satire of other rankings. All the more reason to read closely whenever you look into a list of colleges.

Graduate school rankings

Examples: National Research Council, US News Graduate Schools

To nobody’s surprise, there are ongoing efforts to rank graduate programs. The U.S. News rankings of professional schools, such as those for education, medicine and law, are highly respected and prompt the sort of administrative adaptation and gaming described earlier. The rankings of doctoral programs, however, are a disaster: they are entirely reputation-based and rely on samples that are too small and have remarkably high nonresponse rates, which makes an already inexact science worse.

A 2010 ranking of doctoral programs conducted by the National Research Council was such a train wreck – it was delayed multiple times and used outdated data – that some departments disavowed the rankings before they were even released. A member of the committee that designed the ranking wrote a public explanation of why it was a scientific failure. The college rankings enterprise seems to collapse when it is subjected to the exacting, scientifically rigorous expectations of academics.

The Carnegie Classification

The most authoritative categorization of colleges is the Carnegie Classification, which has existed since the 1970s. Tellingly, it is not a ranking, but a comprehensive sorting of colleges and universities based on their research and teaching missions. To use California’s public higher education system as an example, University of California campuses are generally “Doctoral Universities: Highest Research Activity,” commonly dubbed “R1s”; California State University campuses are mostly larger “Master’s Colleges and Universities,” since the master’s is generally the highest degree they award; and California Community Colleges run the gamut of the “Associate’s Colleges” category. While these are broad categorizations, they may be the fairest way to understand which institutions can regard each other as peers, precisely because they are categorizations and not rated evaluations.

UCLA, ranked

If you tried to find UCLA’s position in every college ranking of major or minor repute, you’d find that the school is all over the place: as high as first (U.S. News public universities) and as low as 48th (Forbes), according to the most recent editions of both. So we know that UCLA is prestigious and probably good in some way, but we cannot be any more specific than that. Even this modest consistency is remarkable, and UCLA owes it to belonging to a very small pantheon of the world’s best universities that are internationally prestigious research powerhouses. But move even modestly down the order – say, to UC Santa Barbara, or any flagship university of a state not named Michigan – and you will find absurd levels of fluctuation between rankings.

This happens for many reasons, one being that any ranking that relies on reputation is limited by how many schools people can recall. Rankings, then, are good only for developing the most general idea of how good a school is, and each ranking is only somewhat instructive for one or two variables, such as post-graduation income or quality of instruction. The number attached to each school is less useful than the ranking’s effort to collect various data on schools in one place. The numbers themselves are in no way a real evaluation of schools.

Something tells me, though, that this won’t stop us from telling everyone we know that we go to the best public university in the country.